Evolution of My Research Interests

Research Summary (2025)

My research lies at the intersection of quantum science and theoretical particle physics. Rapid advances in quantum technologies continually provide new approaches to long-standing problems. These include quantum metrology techniques for detecting faint signals and quantum computation for simulating dynamics by directly manipulating quantum states. In light of this, I believe it is crucial to explore how these advances can be used to investigate fundamental physics. With this in mind, I am working on (i) direct detection of light dark matter using quantum metrology and (ii) quantum algorithms for simulating parton shower dynamics.

One of my research directions is the development of methods to search for light dark matter using quantum metrology techniques. Conventional dark matter direct detection programs, which primarily target the $\mathrm{GeV}$-mass region, have yet to provide any evidence of dark matter. This has motivated the community to explore a broader mass range, including the sub-$\mathrm{GeV}$ scale, which remains largely unexplored, partly because of challenges such as low excitation energies and small event rates. Quantum metrology techniques offer promising ways of detecting such faint signals. By leveraging these techniques, I aim to overcome current limitations in sensitivity and frequency coverage and to open new approaches to light dark matter searches.

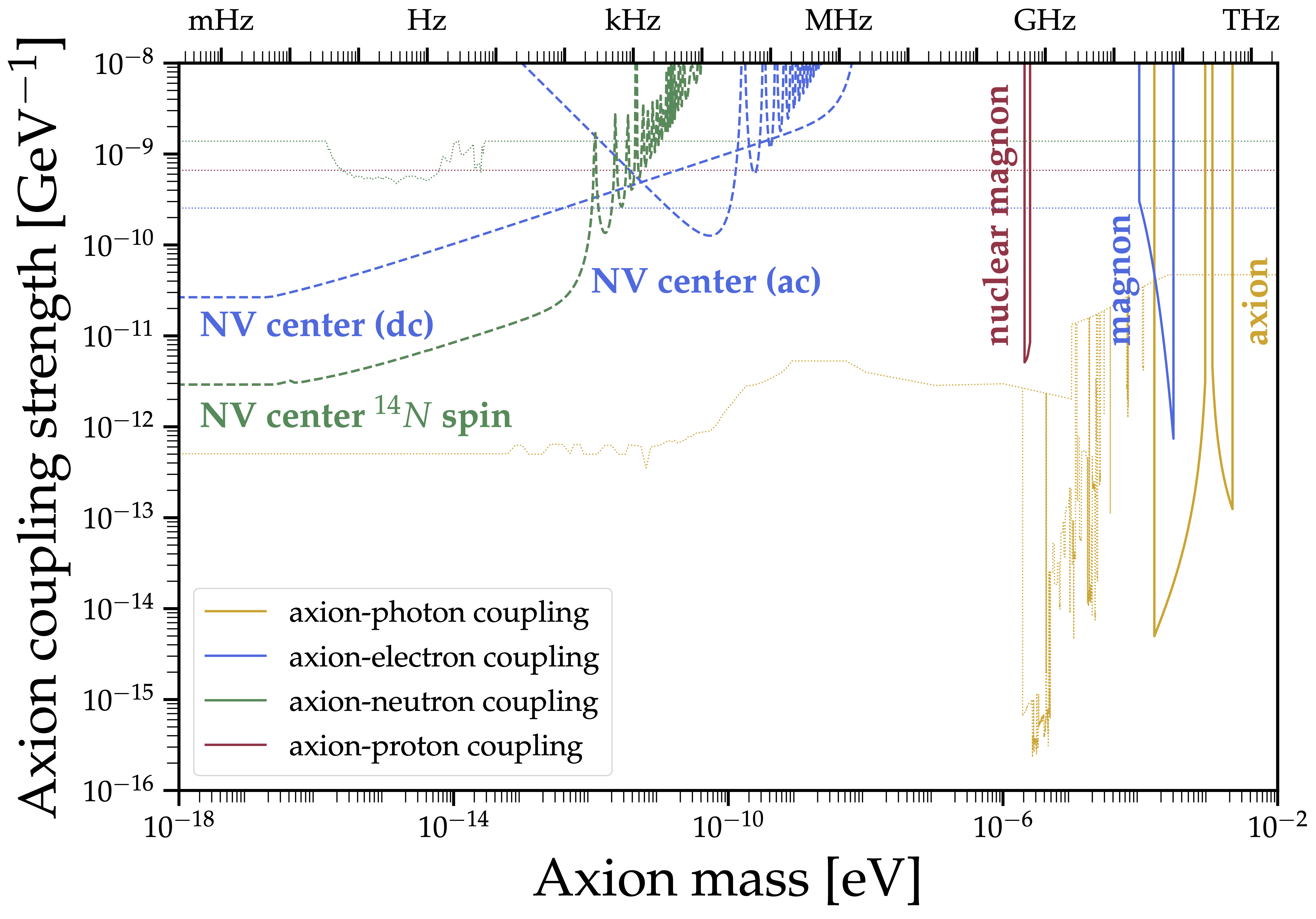

Among light dark matter candidates, I primarily focus on a pseudo-scalar candidate, often referred to as axion dark matter, which is strongly motivated from a high-energy theoretical perspective. The axion provides a compelling solution to the strong CP problem, the puzzle of why CP symmetry is conserved in the strong interaction sector. It also emerges naturally in string theory, an ultraviolet completion that includes gravity. From a metrological perspective, the axion is particularly intriguing because its interactions with ordinary matter mimic those of electromagnetic fields, while several distinctive features allow it to be distinguished from conventional electromagnetic fields. These features include a spin-dependent coupling whose strength is unrelated to the gyromagnetic ratio and a signal coherence time that is determined by its frequency. In this context, my research aims to develop quantum metrology protocols that fully exploit these distinctive characteristics of the axion dark matter signal to maximize its detection potential. Fig. 1a summarizes the frequency coverage of the various approaches I have investigated; below, I describe these approaches with reference to this figure.

Fig. 1a: Summary of the frequency coverage of the various approaches discussed in the main text. Projected sensitivities to pseudo-scalar (axion) dark matter are shown for illustration. Each projection, shown as a solid or dashed line, should be compared with the current constraint on the same coupling, plotted as a dashed line of the same color.
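
As a rough illustration of how the coherence time of the axion signal is fixed by its frequency, the sketch below assumes the standard virialized-halo picture: a velocity dispersion $v \sim 10^{-3}$ (in units of $c$) broadens the line to a fractional width of order $v^2$, so the signal stays coherent for roughly $10^6$ oscillation periods. The function name and the default `v_over_c` are illustrative assumptions, and order-one factors (including $2\pi$) are ignored.

```python
def coherence_time(freq_hz: float, v_over_c: float = 1e-3) -> float:
    """Order-of-magnitude coherence time (s) of a dark matter field oscillating at freq_hz.

    Assumes a fractional linewidth of order v^2 set by the halo velocity dispersion,
    so tau ~ 1 / (f * v^2); O(1) factors are dropped.
    """
    oscillations_per_coherence_time = 1.0 / v_over_c**2  # ~1e6 for v ~ 1e-3
    return oscillations_per_coherence_time / freq_hz


for freq in (1e6, 1e9, 1e12):  # 1 MHz, 1 GHz, 1 THz
    print(f"f = {freq:.0e} Hz -> tau ~ {coherence_time(freq):.0e} s")
```

Under these assumptions the coherence time is about 1 s at 1 MHz, 1 ms at 1 GHz, and 1 μs at 1 THz. Integrating longer than this timescale improves the sensitivity more slowly than for a fully coherent signal, which is why the coherence time enters the design of a measurement protocol.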

I have explored three distinct approaches that use different collective spin excitations, namely the magnon [1], an axion-like excitation [2], and the nuclear magnon [3], each probing different dark matter couplings. The frequency coverage of these approaches is shown by the solid lines in Fig. 1a. If the dark matter mass corresponds to the challenging sub-$\mathrm{THz}$ frequency range, approaches based on these excitations provide one of the few viable detection opportunities. Many ongoing experiments, including QUAX and TOORAD, are searching for spin excitations based on similar concepts and may ultimately discover dark matter.
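
To translate between the dark matter mass and the frequency axis of Fig. 1a, one can use $f = m c^2 / h$ for a nonrelativistic boson. A minimal sketch, assuming the CODATA value of $h$ in $\mathrm{eV\,s}$ (truncated); the helper names are illustrative:

```python
PLANCK_EV_S = 4.135667696e-15  # Planck constant h in eV*s (CODATA value, truncated)


def mass_to_frequency(mass_ev: float) -> float:
    """Oscillation frequency f = m c^2 / h (Hz) of a nonrelativistic boson of mass mass_ev (eV)."""
    return mass_ev / PLANCK_EV_S


def frequency_to_mass(freq_hz: float) -> float:
    """Inverse conversion: mass (eV) whose oscillation frequency is freq_hz."""
    return freq_hz * PLANCK_EV_S


print(f"1 meV -> {mass_to_frequency(1e-3) / 1e12:.3f} THz")
print(f"1 GHz -> {frequency_to_mass(1e9) * 1e6:.2f} micro-eV")
```

With this conversion, 1 meV corresponds to about 0.24 THz, so the sub-THz window covers masses below roughly 4 meV.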

In Refs. \cite{Chigusa:2023hms}, [4], I proposed light dark matter searches using nitrogen-vacancy center magnetometry. This specialized quantum metrology technique enables new approaches with broad frequency coverage and/or improved sensitivity, summarized by the dashed lines in Fig. 1a. My approach exploits the sensitivity of nitrogen-vacancy centers to various spin species, indicated by the different colors of the dashed lines, and offers a novel way to distinguish magnetic noise from dark matter signals. Recently, we launched an experiment based on these ideas at the International Center for Quantum-field Measurement Systems for Studies of the Universe and Particles (QUP). This experimental collaboration has published a data analysis method for incoherent signals motivated by dark matter searches \cite{10.1063/5.0223678} and is now moving toward cryogenic operation.

Among the quantum techniques designed to surpass the standard quantum limit and approach the Heisenberg limit, I focus on squeezing and entanglement. I explored the possibility of enhancing the nuclear magnon signal excited in superfluid $\mathrm{^3He}$ through squeezing and identified the conditions under which squeezing improves sensitivity [5]. These conditions must be carefully examined to assess the potential of squeezing for spin-based dark matter searches, such as those in [1], [2], [3], in which the signal coherence time is limited. Regarding entanglement, certain entangled states, such as the Greenberger–Horne–Zeilinger (GHZ) state, can in principle reach the Heisenberg limit, but they are often vulnerable to Markovian noise, which can negate the advantage of entanglement. I investigated this issue in the context of dark matter searches [6] and identified situations in which entangled states enhance sensitivity even in the presence of noise; further optimization is left for future work.
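
The way Markovian noise can cancel the entanglement advantage is visible in a textbook Ramsey-type estimate. The sketch below rests on simplifying assumptions that are not taken from [6]: each qubit dephases independently at rate $\gamma$, the frequency $\omega$ to be sensed imprints a phase $\omega t$ per qubit, and the readout sits at its optimal operating point, where the Fisher information on the phase equals the squared contrast. Then $N$ unentangled qubits give $N t^2 e^{-2\gamma t}$ per run and an $N$-qubit GHZ state gives $N^2 t^2 e^{-2N\gamma t}$. Without noise the GHZ state gains a factor of $N$ (Heisenberg versus standard quantum limit), but once the interrogation time $t$ is optimized for a fixed total time, both reach $N/(2e\gamma)$.

```python
import math


def fisher_per_unit_time(n_qubits, gamma, t, ghz):
    """Fisher information on omega per unit total time, repeating runs of length t.

    Assumes independent Markovian dephasing at rate gamma per qubit and readout
    at the optimal operating point (Fisher information on the phase = contrast^2).
    """
    if ghz:
        # GHZ state: phase N*omega*t, contrast exp(-N*gamma*t)
        per_run = (n_qubits * t) ** 2 * math.exp(-2 * n_qubits * gamma * t)
    else:
        # N unentangled qubits: N copies of phase omega*t, contrast exp(-gamma*t)
        per_run = n_qubits * t**2 * math.exp(-2 * gamma * t)
    return per_run / t  # T/t repetitions fit into a fixed total time T


def optimized(n_qubits, gamma, ghz, t_min=1e-4, t_max=10.0, points=20_000):
    """Maximize over the interrogation time t on a logarithmic grid."""
    ratio = (t_max / t_min) ** (1 / (points - 1))
    return max(
        fisher_per_unit_time(n_qubits, gamma, t_min * ratio**i, ghz) for i in range(points)
    )


n, gamma = 10, 1.0
noiseless_gain = fisher_per_unit_time(n, 0.0, 1.0, True) / fisher_per_unit_time(n, 0.0, 1.0, False)
print("noiseless GHZ gain at fixed t:", noiseless_gain)
print("optimized, unentangled:", optimized(n, gamma, ghz=False))
print("optimized, GHZ:        ", optimized(n, gamma, ghz=True))
print("analytic N/(2 e gamma):", n / (2 * math.e * gamma))
```

Other noise models or signal properties can change this conclusion; the sketch only reproduces the baseline case in which the advantage disappears.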

My future plans involve the continued development of metrology techniques to expand their applicability to new physics. I intend to develop metrology techniques that integrate quantum error correction and quantum correlations, which have been studied extensively in the contexts of quantum computation and clock synchronization, to enhance sensitivity to signals with multiple unknown properties. Additionally, building on the methods developed for light dark matter detection, I plan to apply similar concepts to other targets, such as high-frequency gravitational waves and the cosmic axion background. Through these efforts, I aim to contribute significantly to the broader field of quantum metrology and its applications to uncovering new physics.

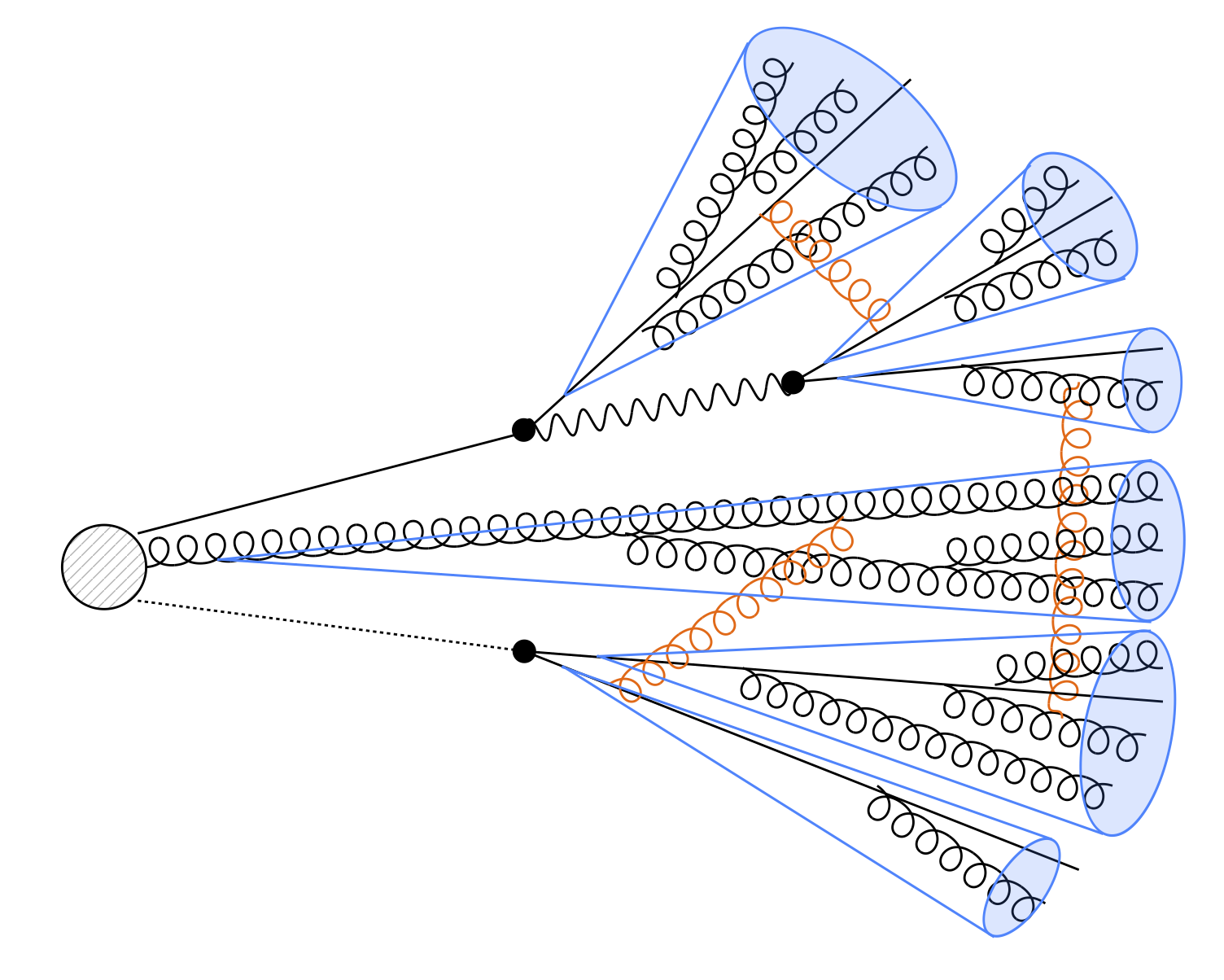

Fig. 2a: A schematic illustration of a multi-emission process including quantum interference effects beyond the classical parton shower treatment. The leading-order contribution to inclusive parton shower dynamics is fully captured by the blue cones, which represent collinear emissions that can be treated independently. At next-to-leading order, however, soft interference effects, depicted by the orange lines, must be taken into account and can potentially lead to global event-wise entanglement.

Another direction of my research focuses on developing quantum algorithms to study quantum dynamics. Today, quantum computing resources with a substantial number of qubits are publicly accessible, and their availability is steadily increasing. Given this, now is the ideal time to explore quantum algorithms for physics research. Leveraging this opportunity, my research aims to push the boundaries of quantum simulations to better understand complex physical systems.

As an important example of a system with intriguing quantum properties, I study parton showers. The original parton shower algorithm is a classical approach that has been widely used to describe multi-emission processes (see Fig. 2a for a schematic illustration) in collider and astroparticle physics. However, it does not incorporate important quantum interference effects, which can significantly alter the particle multiplicity distribution, especially in the presence of a non-trivial flavor structure [7]. To address this issue, I developed a quantum parton shower algorithm using a veto procedure [8], which accounts for the exponentially growing number of diagrams while requiring only polynomial quantum resources. This is the first quantum algorithm capable of reconstructing full kinematic information, an important first step toward realistic quantum simulations of parton shower dynamics.
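
For readers unfamiliar with the veto procedure, the sketch below shows its classical form as commonly used in Monte Carlo parton showers; it is not the quantum algorithm of [8]. Starting from a scale $t_\mathrm{start}$, trial emission scales are drawn from an easily integrable overestimate $g \ge f$ of the emission density $f$, and each trial is kept with probability $f/g$; after a rejection, the evolution continues downward from the rejected scale. The accepted scale is distributed as $f(t)\exp(-\int_t^{t_\mathrm{start}} f)$, the Sudakov-weighted emission density. The constant overestimate and the toy density $f(t) = k t$ are hypothetical choices for illustration.

```python
import math
import random


def veto_sample(f, g_max, t_start, t_cut, rng):
    """Sample the next emission scale below t_start from f(t) * exp(-int_t^t_start f).

    Uses a constant overestimate g_max >= f(t) on [t_cut, t_start].
    Returns None if no emission occurs above t_cut.
    """
    t = t_start
    while True:
        # Trial scale from the overestimate: solve g_max * (t - t_new) = -ln(r), r in (0, 1].
        t += math.log(1.0 - rng.random()) / g_max
        if t <= t_cut:
            return None  # evolution reached the cutoff without an emission
        # Veto step: keep the trial with probability f(t) / g_max,
        # otherwise continue evolving downward from the rejected scale.
        if rng.random() < f(t) / g_max:
            return t


def toy_density(t, k=0.5):
    """Hypothetical emission density f(t) = k * t."""
    return k * t


# Toy check: the no-emission probability should match the Sudakov factor.
k, t_start, t_cut = 0.5, 2.0, 0.5
g_max = k * t_start  # toy_density is increasing, so its maximum is at t_start
rng = random.Random(1)
n_events = 100_000
no_emission = sum(
    veto_sample(toy_density, g_max, t_start, t_cut, rng) is None for _ in range(n_events)
) / n_events
sudakov = math.exp(-k * (t_start**2 - t_cut**2) / 2)
print(f"sampled: {no_emission:.4f}, exact: {sudakov:.4f}")
```

Classically, the acceptance step makes the overestimate harmless: rejected trials leave no trace in the final distribution, so only the efficiency depends on how tight $g$ is.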

I plan to further develop quantum algorithms addressing both computational and physical aspects. The sampling method for the evolution variable, the virtuality, can be optimized to reduce the gate cost, but the optimized sampling distorts the quantum state through the artificial veto procedure. I am developing an algorithm that restores the correct quantum state while using an improved sampling method. Additionally, I intend to develop algorithms that incorporate next-to-leading order effects beyond the collinear emissions represented by the blue cones in Fig. 2a. To achieve this, soft interference effects must be properly accounted for by storing the emission history in qubits. Because these effects can produce global event-wise entanglement, illustrated by the orange lines connecting the blue cones in Fig. 2a, this approach can fully exploit the advantages of quantum computing. By developing these algorithms, I aim to create a comprehensive toolkit for quantum simulations of scattering processes at high-energy colliders that properly incorporates quantum interference effects.

Programs for exploring new physics should evolve alongside the rapid technological advances in quantum science. By incorporating advanced quantum metrology techniques and developing quantum algorithms, I aim to create innovative approaches to exploring physics, with the ultimate goal of contributing to a deeper understanding of the fundamental mysteries of the universe.

Research Summary (2024)

Quantum technologies are now advancing so rapidly that new approaches to many problems become available within short periods. These new approaches include quantum sensing techniques for detecting faint signals and quantum computation, which allows us to simulate the dynamics of quantum systems by directly manipulating quantum states. Given this situation, it is incumbent on theoretical particle physicists to keep up with new technologies and to develop ways to use them in the search for physics beyond the standard model. I have been working along these lines, mainly studying the phenomenology of the standard model and beyond, including dark matter searches and important quantum corrections to parton showers, using various quantum technologies. My main research directions are (i) direct detection of light dark matter with quantum sensing techniques and (ii) construction of quantum algorithms applicable to systems such as parton showers. In addition, as (iii) other directions, I work on several approaches to further explore physics beyond the standard model, including false vacuum decay rate calculations and collider phenomenology.

Conventional dark matter direct detection programs, which mainly target the $\mathrm{GeV}$-mass region, have so far yielded no sign of dark matter. This motivates the community to explore other mass windows, which remain wide open, especially toward lighter masses. However, direct detection of light dark matter is challenging because of the low excitation energy and the small event rate. Quantum sensing techniques are useful for detecting such faint signals and for amplifying them to improve sensitivity.

If the dark matter has a wavelength longer than the interatomic spacing, its interaction with a material excites collective modes such as phonons and magnons. Along these lines, I worked on three approaches that use different collective spin excitations as signals, namely the magnon excitation [1], the axion-like excitation [2], and the nuclear magnon excitation [3], each of which probes different dark matter couplings. Many ongoing experiments are searching for spin excitations based on the same or similar ideas and might discover dark matter. In addition, as part of an effort to identify the background events in many ongoing phonon-based experiments, I studied lattice defects as a possible source of $\mathrm{eV}$-scale background events \cite{Frenkel}.
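
To see when the long-wavelength condition applies, the de Broglie wavelength $\lambda = h/(m v)$ can be compared with typical interatomic spacings of a few ångströms. The sketch assumes a typical halo velocity $v \approx 10^{-3} c$ and an illustrative spacing of $3 \times 10^{-10}\,\mathrm{m}$; both values are assumptions, not numbers from the works cited above.

```python
HC_EV_M = 1.23984198e-6  # h * c in eV*m


def de_broglie_wavelength_m(mass_ev: float, v_over_c: float = 1e-3) -> float:
    """Wavelength h / (m v) in meters for a nonrelativistic particle of mass mass_ev (eV)."""
    return HC_EV_M / (mass_ev * v_over_c)


interatomic_spacing_m = 3e-10  # illustrative value, a few angstroms
for mass_ev in (1e9, 1e6, 1e3):  # 1 GeV, 1 MeV, 1 keV
    lam = de_broglie_wavelength_m(mass_ev)
    print(f"m = {mass_ev:.0e} eV: wavelength = {lam:.1e} m, "
          f"longer than spacing: {lam > interatomic_spacing_m}")
```

Under these assumptions, dark matter at the $\mathrm{GeV}$ scale has a wavelength far below the lattice spacing, while sub-$\mathrm{MeV}$ dark matter has a wavelength of a nanometer or more, which is the regime where collective modes become the natural signal.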

In this context, more specialized quantum sensing techniques are useful for developing new approaches with different target frequencies or for enhancing the dark matter signal. In Ref. [4], I proposed a light dark matter search based on nitrogen-vacancy center magnetometry, which offers broad frequency coverage. Recently, an experiment based on this idea has been launched at the International Center for Quantum-field Measurement Systems for Studies of the Universe and Particles (QUP). I also studied the possibility of enhancing the nuclear magnon signal excited in superfluid $\mathrm{^3He}$ with squeezing and revealed the conditions for sensitivity improvement [5].

As in [5], squeezing the spin state could enhance the sensitivity of the spin-excitation setups considered in [1], [2], [3]. It is therefore worth identifying which measurements can benefit from this approach. Another important ingredient of quantum sensing is entanglement. If $N$ qubits are available for a measurement, entanglement ideally allows the event rate to scale as $\propto N^2$, rather than the naive $\propto N$. Since current technologies have already achieved entanglement among multiple qubits, an important task is to find the best entanglement-based setup while taking into account non-trivial effects on experimental parameters, such as a shortened coherence time. New ideas should aim not only to improve sensitivity but also to cover a broad mass range and various dark matter couplings. Finally, similar ideas are applicable to other targets, including the cosmic axion background and high-frequency gravitational waves, as partly discussed in [6].

Quantum computing resources with a sizable number of qubits are now publicly available. Together with rapid technological progress, which promises that quantum computers will soon become a tool for investigating the dynamics of quantum systems, this makes now the time to study quantum algorithms that can run on current and near-future quantum computers.

The parton shower algorithm is a classical approach to computing multi-emission cross sections that has been widely used in collider and astroparticle physics simulations. However, because it is based on classical probability distributions, it does not incorporate important quantum interference effects that arise when fermions have a non-trivial flavor structure, and these effects could significantly modify the particle multiplicity distribution [7]. Moreover, because of the flavor index assignments, the number of diagrams to be calculated grows exponentially with particle multiplicity, and so does the computational cost of a classical calculation. Motivated by this, I investigated a quantum parton shower algorithm using a veto procedure. This is the first quantum algorithm that requires only polynomial computational resources while accounting for quantum interference effects and reconstructing full kinematic information [8].

The quantum veto algorithm studied in [8] still leaves considerable room for improvement in both computational and physical aspects. The evolution variable of the parton shower, the virtuality, is discretized in the current algorithm; in principle, one can instead sample the virtuality from the exponentiated probability density, as is done in classical parton shower algorithms, which would further speed up the simulation. Also, a physically more complete algorithm including soft interference could be constructed by storing the history information, i.e., the list of partons that emit at each step, in qubits. Finally, this research project can be extended to simulations of non-perturbative dynamics, which are often difficult to treat with conventional methods. When direct observation of the dynamics is difficult, as in the case of false vacuum decay, the quantum simulation itself could serve as a proof of concept.
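
Why a discretized evolution variable is costly can be illustrated classically. If the evolution range is split into $n$ bins and an emission occurs in each bin with probability $f(t_i)\,\Delta t$, the no-emission probability is $\prod_i (1 - f(t_i)\,\Delta t)$, which approaches the Sudakov factor $\exp(-\int f)$ only as $n$ grows, and a step-by-step evolution must visit every bin. Sampling the exponentiated density directly avoids this. The toy density $f(t) = k t$ is a hypothetical choice, and the sketch says nothing about gate counts in [8].

```python
import math


def no_emission_discrete(f, t_start, t_cut, n_steps):
    """No-emission probability with the evolution range split into n_steps bins.

    In each bin an emission occurs with probability f(t_mid) * dt (midpoint rule),
    which requires f(t) * dt < 1.
    """
    dt = (t_start - t_cut) / n_steps
    prob = 1.0
    for i in range(n_steps):
        t_mid = t_start - (i + 0.5) * dt
        prob *= 1.0 - f(t_mid) * dt
    return prob


def toy_density(t, k=0.5):
    """Hypothetical emission density f(t) = k * t."""
    return k * t


t_start, t_cut, k = 2.0, 0.5, 0.5
sudakov = math.exp(-k * (t_start**2 - t_cut**2) / 2)  # exp(-integral of k*t)
for n_steps in (10, 100, 1000):
    approx = no_emission_discrete(toy_density, t_start, t_cut, n_steps)
    print(f"n = {n_steps:5d}: {approx:.5f} (exact {sudakov:.5f})")
```

The discretization error shrinks roughly like $1/n$, so accuracy is bought with a number of evolution steps that grows with the required precision.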

It is also important to combine quantum algorithms with quantum sensing, e.g., to improve the sensitivity to light dark matter signals. One potentially useful algorithm is amplitude amplification, which ideally allows the signal detection probability to scale as $\propto N^2$ with $N$ repeated operations, rather than $\propto N$.
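
The quadratic scaling can be checked in the simplest setting, assuming an ideal, noiseless amplitude amplification in which a signal outcome initially has a small probability $p_0 = \sin^2\theta$. After $k$ iterations, which use the state preparation $2k+1$ times, the success probability is $\sin^2((2k+1)\theta) \approx (2k+1)^2 p_0$ for small $\theta$, whereas $2k+1$ independent repetitions give about $(2k+1) p_0$. How the required oracle would be realized in a dark matter sensor is not addressed by this sketch.

```python
import math


def amplified_probability(p0, k):
    """Success probability after k amplitude-amplification (Grover) iterations.

    The state is tracked in the 2D subspace spanned by the 'good' (signal) and
    'bad' components, starting from sqrt(p0)|good> + sqrt(1 - p0)|bad>.
    """
    s, c = math.sqrt(p0), math.sqrt(1.0 - p0)  # sin(theta), cos(theta) of the initial state
    good, bad = s, c
    for _ in range(k):
        good = -good  # oracle: flip the sign of the good component
        overlap = good * s + bad * c  # <psi0|state>
        good, bad = 2 * overlap * s - good, 2 * overlap * c - bad  # reflect about |psi0>
    return good**2


p0, k = 1e-4, 10
n_uses = 2 * k + 1  # state preparations: one initial plus two per iteration
theta = math.asin(math.sqrt(p0))
print("amplified:", amplified_probability(p0, k), "formula:", math.sin(n_uses * theta) ** 2)
print("independent repetitions:", 1 - (1 - p0) ** n_uses)
```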

The observed Higgs mass, $M_h \simeq 125\,\mathrm{GeV}$, implies that the Higgs self-coupling $\lambda$ runs to negative values at high energy scales under the standard model renormalization group flow, which indicates that the electroweak vacuum of the standard model is not absolutely stable. Whether the lifetime of the electroweak vacuum exceeds the age of the universe, the so-called electroweak vacuum stability problem, should be properly determined to test whether the standard model and physics beyond it are compatible with our universe. However, false vacuum decay rate calculations at next-to-leading order involve complicated differential equations that are computationally hard to solve, as well as divergences originating from symmetries that are conceptually difficult to treat.
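
The mechanism behind the negative running can be read off from the one-loop standard model beta function of $\lambda$, quoted here for the normalization $V \supset \lambda (H^\dagger H)^2$ (so that $M_h^2 = 2\lambda v^2$ with $v \approx 246\,\mathrm{GeV}$), where $y_t$ is the top Yukawa coupling and $g$, $g'$ are the $SU(2)_L$ and $U(1)_Y$ gauge couplings. Higher-loop terms, which matter for a precise analysis, are omitted.

$$
\frac{d\lambda}{d\ln\mu} = \frac{1}{16\pi^2}\left[
  24\lambda^2 + 12\lambda y_t^2 - 6 y_t^4
  - 3\lambda\left(3g^2 + g'^2\right)
  + \frac{3}{8}\left(2g^4 + \left(g^2 + g'^2\right)^2\right)
\right]
$$

With $\lambda \approx 0.13$ and $y_t$ close to 1 at the electroweak scale, the $-6y_t^4$ term dominates, so $\lambda$ decreases as the renormalization scale $\mu$ increases.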

In [9], [10], I overcame these technical difficulties and obtained a semi-analytic one-loop expression for the electroweak vacuum decay rate in the standard model and its extensions without additional Higgs bosons. This result allows the decay rate to be calculated much faster and more precisely than before. I generalized this result in [11] and provided the first semi-analytic expression for the one-loop vacuum decay rate in general gauge theories. I used this expression to test electroweak vacuum stability in a setup of the minimal supersymmetric standard model that can resolve the possible muon $g-2$ tension, and obtained upper bounds on the masses of certain new particles [12], [13]; part of the allowed mass range can be probed by future collider experiments.
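
The tree-level part of such a calculation can be illustrated numerically. If the Higgs potential at large field values is approximated by $V(\phi) = -(|\lambda|/4)\phi^4$ with $\lambda < 0$ ($\phi$ being the radial Higgs mode), the bounce is the Fubini–Lipatov solution $\bar\phi(r) = \sqrt{8/|\lambda|}\,R/(r^2 + R^2)$, whose Euclidean action $\mathcal{B} = 8\pi^2/(3|\lambda|)$ is independent of the size $R$. The decay rate is suppressed by $e^{-\mathcal{B}}$; the one-loop prefactor, which is what [9], [10] compute semi-analytically, is not reproduced here. The value $|\lambda| = 0.01$ below is only an illustrative input.

```python
import math


def bounce_action(lam_abs, R=1.0, r_max=400.0, n=400_000):
    """Euclidean action of the Fubini-Lipatov bounce for V(phi) = -(|lam|/4) phi^4.

    S = 2 pi^2 * int_0^inf r^3 [phi'(r)^2 / 2 + V(phi(r))] dr, evaluated with
    Simpson's rule on [0, r_max] (n must be even); the neglected tail is O(R^2 / r_max^2).
    """
    a = math.sqrt(8.0 / lam_abs)  # bounce amplitude: phi(r) = a R / (r^2 + R^2)

    def integrand(r):
        d = r * r + R * R
        phi = a * R / d
        dphi = -2.0 * a * R * r / d**2
        return r**3 * (0.5 * dphi**2 - 0.25 * lam_abs * phi**4)

    h = r_max / n
    total = integrand(0.0) + integrand(r_max)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * integrand(i * h)
    return 2.0 * math.pi**2 * total * h / 3.0


lam_abs = 0.01  # illustrative |lambda|, not a fitted standard model value
numeric = bounce_action(lam_abs)
analytic = 8.0 * math.pi**2 / (3.0 * lam_abs)
print(f"numerical B = {numeric:.2f}, analytic 8 pi^2 / (3|lambda|) = {analytic:.2f}")
```

The $R$ independence reflects the classical scale invariance of a pure quartic potential; the associated dilatation zero mode is one of the symmetry-related subtleties that a one-loop calculation must handle.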

Machine learning, a rapidly evolving computational tool, is well suited to analyzing the huge and complex datasets of collider experiments. I applied it to improve the preselection of Higgs boson events at future lepton colliders, which will be a basic technology for the Higgs physics program. I compared the performance of this approach with that of the traditional boosted decision tree approach and concluded that an $\mathcal{O}(10)\,\%$ improvement can be expected [14]. In addition, considerable effort has been devoted to realizing a muon collider, which could reach higher energies than electron-positron colliders while providing cleaner signals than hadron colliders. I explored ways to search for a new particle that couples dominantly to top quarks at a muon collider, using four-top events and resonance peak searches. I showed that the larger fraction of boosted top jets is a clear advantage of the high-energy muon collider over the Large Hadron Collider, despite challenges such as the beam-induced background [15].

Quantum science is now at an exciting stage, with new experimental technologies and theoretical ideas emerging constantly. I intend to take full advantage of this opportunity to develop new ideas for phenomenological studies of particle physics based on the rapid developments in quantum science, aiming to unravel the nature of the universe.